- #INSTALL APACHE SPARK LOCAL INSTALL#

- #INSTALL APACHE SPARK LOCAL DOWNLOAD#

- #INSTALL APACHE SPARK LOCAL FREE#

Once you log in to your Databricks account, create a cluster. The notebook that's needed for this exercise will run in that cluster. When your cluster is ready, create a notebook. Next, you will need to define the source of your data. Databricks lets you upload the data or link to it from one of their partner providers. An uploaded file needs to be smaller than 2GB. For the sake of illustration, let's use the heart disease dataset from the UCI Machine Learning Repository. Note the path to your dataset once you upload it to Databricks so that you can use it to load the data. Also, make sure your notebook is connected to the cluster. Then load the file: `df = spark.read.csv('/FileStore/tables/heart.csv', sep=',', inferSchema='true', header='true')`. Notice that you didn't have to create a SparkContext. This is because Databricks does this for you by default. You can type `sc` in a cell to confirm that.
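The load step can be wrapped in a small helper. This is a minimal sketch, assuming the `spark` session object that a Databricks notebook provides; the helper name `load_heart_csv` is hypothetical, and the default path is the one used in this article.

```python
def load_heart_csv(spark, path="/FileStore/tables/heart.csv"):
    """Read a comma-separated file with a header row into a Spark DataFrame.

    `spark` is assumed to be an existing SparkSession, such as the one a
    Databricks notebook provides. `load_heart_csv` is a hypothetical helper.
    """
    # inferSchema asks Spark to guess column types instead of reading strings.
    return spark.read.csv(path, sep=",", inferSchema=True, header=True)


# In a notebook cell attached to a running cluster:
# df = load_heart_csv(spark)
# df.printSchema()
```

Passing the session in as an argument keeps the helper usable outside Databricks, where you create the session yourself.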

#INSTALL APACHE SPARK LOCAL INSTALL#

This article will assume that you're running Spark on Databricks. However, all the other alternatives we mentioned will suffice.

Machine Learning in Spark

You can do machine learning in Spark using `pyspark.ml`. This module ships with Spark, so you don't need to look for it or install it.
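To give a feel for `pyspark.ml`, here is a minimal sketch of a classification pipeline. It is an illustration rather than the article's own model: the helper name `build_heart_pipeline` is hypothetical, and the label column name `target` is an assumption about the dataset that you should check against your own schema.

```python
def build_heart_pipeline(feature_cols, label_col="target"):
    """Return an unfitted pyspark.ml Pipeline for binary classification.

    `label_col="target"` is an assumption about the dataset's label column.
    Calling this function requires pyspark and an active Spark session.
    """
    # Imported inside the function so the definition loads without pyspark.
    from pyspark.ml import Pipeline
    from pyspark.ml.classification import LogisticRegression
    from pyspark.ml.feature import VectorAssembler

    # pyspark.ml estimators expect all features packed into one vector column.
    assembler = VectorAssembler(inputCols=list(feature_cols), outputCol="features")
    classifier = LogisticRegression(featuresCol="features", labelCol=label_col)
    return Pipeline(stages=[assembler, classifier])


# Usage, given a DataFrame `df` whose columns are all numeric:
# features = [c for c in df.columns if c != "target"]
# train, test = df.randomSplit([0.8, 0.2], seed=42)
# model = build_heart_pipeline(features).fit(train)
# model.transform(test).select("target", "prediction").show(5)
```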

#INSTALL APACHE SPARK LOCAL FREE#

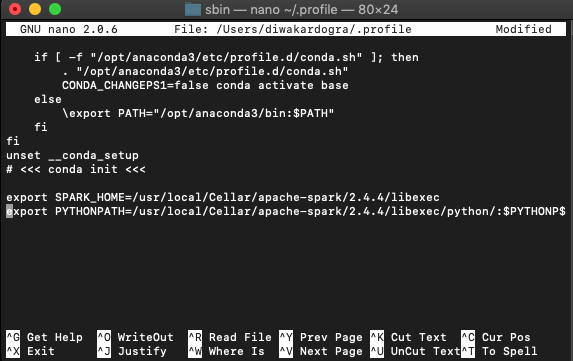

How can you install Spark on your local machine? This process can get very complicated, very quickly. It might not be worth the effort, since you won't actually run a production-like cluster on your local machine. It's easier and quicker to run Spark in a container. You will need to have Docker installed to do this. The next step is to pull the Spark image from Docker Hub and start it: `docker run -p 8888:8888 jupyter/pyspark-notebook`. Once this is done, you will be able to access a ready notebook at localhost:8888. When you navigate to the notebook, you can start a new SparkContext to confirm the installation: `sc = pyspark.SparkContext()` (after `import pyspark`).

Running Apache Spark on Databricks

The most hassle-free alternative is to use the Databricks community edition. The only thing you need to do is set up a free account.
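For the Docker route, creating the context only shows that Spark can be imported. A slightly stronger check is to run a tiny job. The sketch below assumes an existing `SparkContext`; the helper name `spark_smoke_test` is hypothetical.

```python
def spark_smoke_test(sc):
    """Sum the integers 0..99 through Spark; a working context returns 4950."""
    # parallelize() distributes the data, sum() triggers an actual job.
    return sc.parallelize(range(100)).sum()


# In the notebook served at localhost:8888:
# import pyspark
# sc = pyspark.SparkContext.getOrCreate()
# print(sc.version, spark_smoke_test(sc))
```

`getOrCreate()` is used in the usage comment because only one `SparkContext` can be active at a time, so re-running the cell will not fail.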

On Google Colab, install Java first: `!apt-get install openjdk-8-jdk-headless -qq > /dev/null`. Next, set some environment variables based on the location where Spark and Java were installed, for example `os.environ["JAVA_HOME"] = "/usr/lib/jvm/java-8-openjdk-amd64"`. Then use `findspark` to make Spark importable. You need to create the `SparkContext` any time you want to use Spark, so create an instance of it to confirm that Spark was installed successfully: `sc = pyspark.SparkContext()`.
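The environment setup can be sketched as follows. The Java path is the one given in this article; the Spark directory is not specified here, so it appears only as a placeholder, and the helper name `configure_spark_env` is hypothetical.

```python
import os


def configure_spark_env(java_home, spark_home):
    """Point JAVA_HOME and SPARK_HOME at the given install directories."""
    os.environ["JAVA_HOME"] = java_home
    os.environ["SPARK_HOME"] = spark_home


# The Java path comes from this article. The Spark path is a placeholder for
# wherever the Spark archive was extracted; replace it with the real directory.
# configure_spark_env("/usr/lib/jvm/java-8-openjdk-amd64", "/content/<extracted-spark-dir>")
# import findspark
# findspark.init()  # makes the pyspark under SPARK_HOME importable
# import pyspark
# sc = pyspark.SparkContext()
```

The variables have to be set before `findspark.init()` runs, since that call uses `SPARK_HOME` to locate the installation.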

#INSTALL APACHE SPARK LOCAL DOWNLOAD#

To run Spark on Google Colab, you need two things: `openjdk` and `findspark`. `findspark` is the package that makes Spark importable on Google Colab. You will also need to download Spark and extract it.
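The extraction step can be done from Python with the standard library. This is a sketch under assumptions: the article does not say which Spark release to download, so the archive path is left to the caller, and the helper name `extract_spark` is hypothetical.

```python
import tarfile
from pathlib import Path


def extract_spark(archive_path, dest_dir):
    """Extract a gzip-compressed tar archive and return its top-level entries."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        # Collect the first path component of every member, skipping "." entries.
        top_level = sorted(
            {Path(name).parts[0] for name in archive.getnames() if Path(name).parts}
        )
        archive.extractall(dest)
    return [dest / name for name in top_level]


# Usage, with a Spark archive you have already downloaded:
# spark_dirs = extract_spark("<downloaded-spark-archive>.tgz", "/content")
```

A Spark release archive normally unpacks into a single directory, which is the one `SPARK_HOME` should point to.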

Apache Spark can be up to 100 times faster than Hadoop MapReduce on some workloads, thanks to in-memory computation. It can be used from Java, Scala, Python, R, or SQL, and you can run it on Hadoop, Apache Mesos, Kubernetes, or in the cloud. It's expected that you'll be running Spark in a cluster of computers, for example in a cloud environment. However, if you're a beginner with Spark, there are quicker alternatives to get started.
